Building a Neural Network

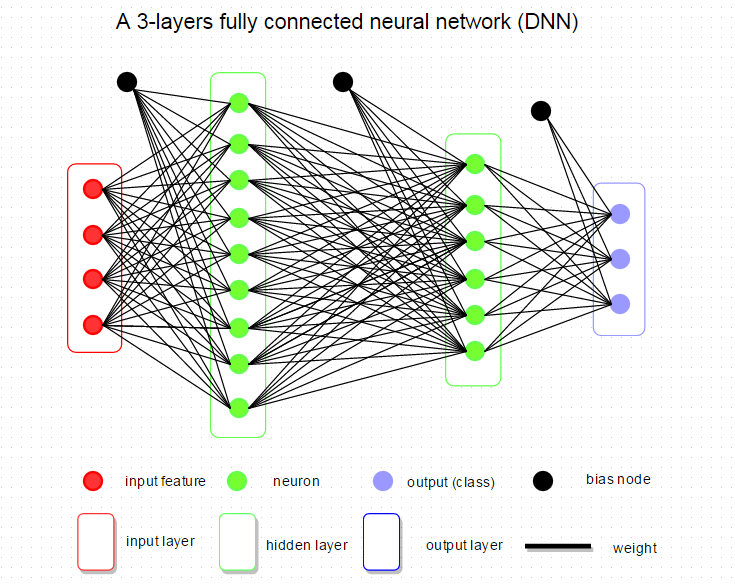

First, pick a network architecture: choose the layout of your neural network, including how many hidden units go in each layer and how many layers you want in total.

- Number of input units = dimension of the features x(i)

- Number of output units = number of classes

- Number of hidden units per layer = usually the more the better (this must be balanced against the cost of computation, which increases with more hidden units)

- Defaults: 1 hidden layer. If you have more than 1 hidden layer, it is recommended that you use the same number of units in every hidden layer.
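As a minimal sketch of these rules, the Python below turns an input dimension, a class count, and a hidden-layer choice into a layer layout, then derives the weight-matrix shapes and the total number of weights. The function names `layer_sizes`, `theta_shapes`, and `n_parameters` are hypothetical. It uses the course's convention that Θ(l) maps layer l to layer l+1 and has s_(l+1) rows and s_l + 1 columns, the extra column belonging to the bias unit. Counting the weights makes the computational cost of adding hidden units concrete.

```python
def layer_sizes(n_features, n_classes, hidden_units, n_hidden_layers=1):
    """Units per layer, input first and output last.

    Follows the defaults above: one hidden layer unless told otherwise,
    and the same number of units in every hidden layer.
    """
    return [n_features] + [hidden_units] * n_hidden_layers + [n_classes]


def theta_shapes(sizes):
    """Shape of each Theta(l): s_(l+1) rows by s_l + 1 columns (bias column included)."""
    return [(sizes[l + 1], sizes[l] + 1) for l in range(len(sizes) - 1)]


def n_parameters(sizes):
    """Total number of weights; this grows with the number of hidden units."""
    return sum(rows * cols for rows, cols in theta_shapes(sizes))


sizes = layer_sizes(400, 10, 25)  # illustrative numbers: 400 features, 10 classes
print(sizes)                      # [400, 25, 10]
print(theta_shapes(sizes))        # [(25, 401), (10, 26)]
print(n_parameters(sizes))        # 10285
```

Doubling the hidden layer to 50 units gives 20560 weights, roughly twice as many, which is the computation trade-off the list mentions.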

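To build a neural network, first decide how many hidden layers to create and how many units each hidden layer should have.

The number of input units is simply the number of input features, and the number of output units is the number of classes. The open question is how many units each hidden layer should have.

In general, the more units a hidden layer has, the better the classification tends to be, but this has to be weighed against the extra computation. By default, a neural network has one hidden layer; when there is more than one hidden layer, every hidden layer has the same number of units.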

Training a Neural Network

- Randomly initialize the weights

- Implement forward propagation to get hΘ(x(i)) for any x(i)

- Implement the cost function

- Implement backpropagation to compute partial derivatives

- Use gradient checking to confirm that your backpropagation works. Then disable gradient checking.

- Use gradient descent or a built-in optimization function to minimize the cost function with the weights in theta.

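The weights are initialized randomly. If all the weights were set to the same constant, every hidden unit would compute the same value from the inputs, backpropagation would give every one of them the same update, and they would stay identical throughout training, so the layer would behave like a single unit. This is the symmetry problem; starting from different random weights is what breaks it (symmetry breaking).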
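The symmetry argument can be checked numerically. The sketch below assumes a single hidden layer of sigmoid units and the cross-entropy cost, and draws each weight uniformly from [-ε, ε] with ε = 0.12, an assumed small value; all helper names are hypothetical. With constant Θ(1) and Θ(2), every hidden unit outputs the same value and receives the same gradient row, so a gradient step leaves them identical, while random weights give distinct units.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def random_theta(rows, cols, epsilon=0.12, rng=random):
    """Each weight drawn independently and uniformly from [-epsilon, epsilon]."""
    return [[rng.uniform(-epsilon, epsilon) for _ in range(cols)] for _ in range(rows)]


def constant_theta(rows, cols, value=0.5):
    """Every weight set to the same constant: the initialization to avoid."""
    return [[value] * cols for _ in range(rows)]


def hidden_layer(theta1, x):
    """Hidden activations a(2) for input x, with the bias unit 1.0 prepended to x."""
    a1 = [1.0] + list(x)
    return [sigmoid(sum(w * v for w, v in zip(row, a1))) for row in theta1]


def theta1_gradient(theta1, theta2, x, y):
    """Backpropagated gradient of the cross-entropy cost w.r.t. Theta(1), one example."""
    a1 = [1.0] + list(x)
    a2 = [1.0] + hidden_layer(theta1, x)
    a3 = [sigmoid(sum(w * v for w, v in zip(row, a2))) for row in theta2]
    delta3 = [h - t for h, t in zip(a3, y)]
    # delta2_j = (sum_k Theta2[k][j] * delta3_k) * g'(z2_j); column j = 0 is the bias, skipped
    delta2 = [
        sum(theta2[k][j] * delta3[k] for k in range(len(theta2))) * a2[j] * (1 - a2[j])
        for j in range(1, len(a2))
    ]
    return [[d * v for v in a1] for d in delta2]


x, y = [0.3, -1.2, 2.0], [1.0, 0.0]  # made-up example: 3 features, 2 classes

# Constant initialization: 4 hidden units that cannot be told apart.
same1, same2 = constant_theta(4, 4), constant_theta(2, 5)
print(hidden_layer(same1, x))  # four identical values
grad = theta1_gradient(same1, same2, x, y)
print(all(row == grad[0] for row in grad))  # True: every hidden unit gets the same update
updated = [[w - 0.5 * g for w, g in zip(row, grow)] for row, grow in zip(same1, grad)]
print(all(row == updated[0] for row in updated))  # True: still symmetric after the step

# Random initialization breaks the symmetry.
print(hidden_layer(random_theta(4, 4, rng=random.Random(0)), x))  # four different values
```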

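Next, implement forward propagation to compute the activations of every layer and hΘ(x(i)), implement the cost function, and use backpropagation to compute the partial derivative of the cost with respect to each Θ. Then use gradient checking to confirm that backpropagation is correct, and disable gradient checking afterwards, because it is far too computationally expensive to run during training.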
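The forward propagation, cost, backpropagation, and gradient-checking steps can be sketched in plain Python as follows. This is an illustration under stated assumptions, not the course's implementation: sigmoid activations, the unregularized cross-entropy cost with one-hot labels, weights stored as nested lists in the s_(l+1) × (s_l + 1) layout, and a two-sided difference with ε = 1e-4 for the numerical gradient. All function names are hypothetical.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(thetas, x):
    """Activations of every layer; bias unit 1.0 prepended to all but the output.
    The last entry is h_Theta(x)."""
    acts = [[1.0] + list(x)]
    for l, theta in enumerate(thetas):
        a = [sigmoid(sum(w * v for w, v in zip(row, acts[-1]))) for row in theta]
        acts.append(a if l == len(thetas) - 1 else [1.0] + a)
    return acts


def cost(thetas, X, Y):
    """Unregularized cross-entropy cost J(Theta) averaged over the m examples."""
    total = 0.0
    for x, y in zip(X, Y):
        h = forward(thetas, x)[-1]
        total += sum(t * math.log(p) + (1 - t) * math.log(1 - p) for t, p in zip(y, h))
    return -total / len(X)


def backprop(thetas, X, Y):
    """Partial derivatives dJ/dTheta(l)[i][j], same nested-list shapes as thetas."""
    m = len(X)
    grads = [[[0.0] * len(row) for row in theta] for theta in thetas]
    for x, y in zip(X, Y):
        acts = forward(thetas, x)
        delta = [p - t for p, t in zip(acts[-1], y)]  # error of the output layer
        for l in range(len(thetas) - 1, -1, -1):
            # accumulate delta(l+1) * a(l)^T; acts[l] already includes the bias unit
            for i, d in enumerate(delta):
                for j, a in enumerate(acts[l]):
                    grads[l][i][j] += d * a / m
            if l > 0:
                # delta(l) = Theta(l)^T delta(l+1) .* a(l) .* (1 - a(l)), bias unit dropped
                a_prev = acts[l]
                delta = [
                    sum(thetas[l][i][j] * delta[i] for i in range(len(delta)))
                    * a_prev[j] * (1 - a_prev[j])
                    for j in range(1, len(a_prev))
                ]
    return grads


def numerical_gradient(thetas, X, Y, eps=1e-4):
    """Gradient checking: (J(theta + eps) - J(theta - eps)) / (2 * eps) for each weight."""
    grads = [[[0.0] * len(row) for row in theta] for theta in thetas]
    for l, theta in enumerate(thetas):
        for i, row in enumerate(theta):
            for j in range(len(row)):
                original = row[j]
                row[j] = original + eps
                plus = cost(thetas, X, Y)
                row[j] = original - eps
                minus = cost(thetas, X, Y)
                row[j] = original  # restore the weight
                grads[l][i][j] = (plus - minus) / (2 * eps)
    return grads


rng = random.Random(1)
sizes = [3, 5, 2]  # illustrative: 3 features, 5 hidden units, 2 classes
thetas = [
    [[rng.uniform(-0.12, 0.12) for _ in range(sizes[l] + 1)] for _ in range(sizes[l + 1])]
    for l in range(len(sizes) - 1)
]
X = [[rng.uniform(-1, 1) for _ in range(3)] for _ in range(4)]
Y = [[1.0, 0.0], [0.0, 1.0], [1.0, 0.0], [0.0, 1.0]]

analytic = backprop(thetas, X, Y)
numeric = numerical_gradient(thetas, X, Y)  # slow: run once, then disable
max_diff = max(
    abs(a - n)
    for ga, gn in zip(analytic, numeric)
    for ra, rn in zip(ga, gn)
    for a, n in zip(ra, rn)
)
print(max_diff)  # should be tiny; a large value points to a bug in backprop
```

`numerical_gradient` evaluates the full cost twice for every single weight, which is why it is only used once to validate `backprop` and then switched off before training.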

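Finally, use gradient descent to minimize the cost function and find the corresponding Θ; these learned weights are the parameters the trained network needs.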
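A minimal batch gradient descent loop driven by backpropagated gradients might look like this. It is a sketch that assumes sigmoid units, the unregularized cross-entropy cost, a fixed learning rate α = 1.0, 2000 iterations, and a toy dataset (logical OR) chosen only for illustration; all helper names are hypothetical. Each iteration applies Θ := Θ - α ∂J/∂Θ to every weight and records the cost.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(thetas, x):
    """Activations of every layer; bias unit 1.0 prepended to all but the output."""
    acts = [[1.0] + list(x)]
    for l, theta in enumerate(thetas):
        a = [sigmoid(sum(w * v for w, v in zip(row, acts[-1]))) for row in theta]
        acts.append(a if l == len(thetas) - 1 else [1.0] + a)
    return acts


def cost(thetas, X, Y):
    """Unregularized cross-entropy cost J(Theta) averaged over the examples."""
    total = 0.0
    for x, y in zip(X, Y):
        h = forward(thetas, x)[-1]
        total += sum(t * math.log(p) + (1 - t) * math.log(1 - p) for t, p in zip(y, h))
    return -total / len(X)


def backprop(thetas, X, Y):
    """Partial derivatives dJ/dTheta, same nested-list shapes as thetas."""
    grads = [[[0.0] * len(row) for row in theta] for theta in thetas]
    for x, y in zip(X, Y):
        acts = forward(thetas, x)
        delta = [p - t for p, t in zip(acts[-1], y)]  # output-layer error
        for l in range(len(thetas) - 1, -1, -1):
            for i, d in enumerate(delta):
                for j, a in enumerate(acts[l]):
                    grads[l][i][j] += d * a / len(X)
            if l > 0:
                # propagate the error back one layer, skipping the bias unit
                delta = [
                    sum(thetas[l][i][j] * delta[i] for i in range(len(delta)))
                    * acts[l][j] * (1 - acts[l][j])
                    for j in range(1, len(acts[l]))
                ]
    return grads


def gradient_descent(thetas, X, Y, alpha=1.0, iterations=2000):
    """Batch gradient descent, Theta := Theta - alpha * dJ/dTheta, updated in place.

    Returns the cost after each iteration so convergence can be inspected."""
    history = []
    for _ in range(iterations):
        grads = backprop(thetas, X, Y)
        for theta, grad in zip(thetas, grads):
            for row, grad_row in zip(theta, grad):
                for j, g in enumerate(grad_row):
                    row[j] -= alpha * g
        history.append(cost(thetas, X, Y))
    return history


# Toy data (logical OR), a 2-3-1 network, small random initial weights.
rng = random.Random(0)
sizes = [2, 3, 1]
thetas = [
    [[rng.uniform(-0.12, 0.12) for _ in range(sizes[l] + 1)] for _ in range(sizes[l + 1])]
    for l in range(len(sizes) - 1)
]
X = [[0, 0], [0, 1], [1, 0], [1, 1]]
Y = [[0.0], [1.0], [1.0], [1.0]]

start = cost(thetas, X, Y)
history = gradient_descent(thetas, X, Y)
print(start, history[-1])  # the final cost should be well below the starting cost
```

Recording the cost after every iteration is a cheap way to confirm that it keeps decreasing; if it grows or oscillates, the learning rate is probably too large.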

References:

http://blog.csdn.net/jinlianjingd/article/details/50767743

https://www.coursera.org/learn/machine-learning/supplement/Uskwd/putting-it-together